Probability density is the relationship between observations and their probability.

Some outcomes of a random variable will have low probability density and other outcomes will have a high probability density.

The overall shape of the probability density is referred to as a probability distribution, and the calculation of probabilities for specific outcomes of a random variable is performed by a probability density function, or PDF for short.

It is useful to know the probability density function for a sample of data in order to know whether a given observation is unlikely, or so unlikely as to be considered an outlier or anomaly and whether it should be removed.

It is also helpful in order to choose appropriate learning methods that require input data to have a specific probability distribution.

It is unlikely that the probability density function for a random sample of data is known. As such, the probability density must be approximated using a process known as probability density estimation.

This tutorial is divided into three parts; they are:

- Summarize Density With a Histogram

- Parametric Density Estimation

- Nonparametric Density Estimation

1. Summarize Density With a Histogram

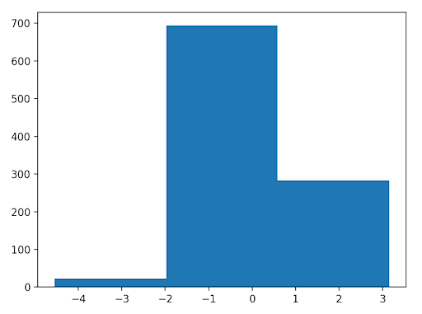

The first step in density estimation is to create a histogram of the observations in the random sample.

A histogram is a plot that involves first grouping the observations into bins and counting the number of events that fall into each bin.

The counts, or frequencies of observations, in each bin are then plotted as a bar graph with the bins on the x-axis and the frequency on the y-axis.

The choice of the number of bins is important as it controls the coarseness of the distribution (number of bars) and, in turn, how well the density of the observations is plotted.

It is a good idea to experiment with different bin sizes for a given data sample to get multiple perspectives or views on the same data.

For example, observations between 1 and 100 could be split into 3 bins (1-33, 34-66, 67-100), which might be too coarse, or 10 bins (1-10, 11-20, ..., 91-100), which might better capture the density.

The normal() NumPy function will achieve this and we will generate 1,000 samples with a mean of 0 and a standard deviation of 1, e.g. a standard Gaussian,

# example of plotting a histogram of a random sample

from matplotlib import pyplot

from numpy.random import normal

# generate a sample

sample = normal(size=1000)

# plot a histogram of the sample

pyplot.hist(sample, bins=10)

pyplot.show()

from matplotlib import pyplot

from numpy.random import normal

# generate a sample

sample = normal(size=1000)

# plot a histogram of the sample

pyplot.hist(sample, bins=10)

pyplot.show()

-----Result-----

|

| Histogram Plot With 10 Bins of a Random Data Sample

|

|

| Histogram Plot With 3 Bins of a Random Data Sample

|

Reviewing a histogram of a data sample with a range of different numbers of bins will help to identify whether the density looks like a common probability distribution or not.

In most cases, you will see a unimodal distribution, such as the familiar bell shape of the normal, the flat shape of the uniform, or the descending or ascending shape of an exponential or Pareto distribution.

You might also see complex distributions, such as two peaks that don’t disappear with different numbers of bins, referred to as a bimodal distribution, or multiple peaks, referred to as a multimodal distribution.

You might also see a large spike in density for a given value or small range of values indicating outliers, often occurring on the tail of a distribution far away from the rest of the density.

2. Parametric Density Estimation

The shape of a histogram of most random samples will match a well-known probability distribution.

The common distributions are common because they occur again and again in different and sometimes unexpected domains.

Get familiar with the common probability distributions as it will help you to identify a given distribution from a histogram.

Once identified, you can attempt to estimate the density of the random variable with a chosen probability distribution.

This can be achieved by estimating the parameters of the distribution from a random sample of data.

For example, the normal distribution has two parameters: the mean and the standard deviation. Given these two parameters, we now know the probability distribution function. These parameters can be estimated from data by calculating the sample mean and sample standard deviation. We refer to this process as parametric density estimation.

Once we have estimated the density, we can check if it is a good fit. This can be done in many ways, such as:

- Plotting the density function and comparing the shape to the histogram.

- Sampling the density function and comparing the generated sample to the real sample.

- Using a statistical test to confirm the data fits the distribution.

# example of parametric probability density estimation

from matplotlib import pyplot

from numpy.random import normal

from numpy import mean

from numpy import std

from scipy.stats import norm

# generate a sample

sample = normal(loc=50, scale=5, size=1000)

# calculate parameters

sample_mean = mean(sample)

sample_std = std(sample)

print('Mean=%.3f, Standard Deviation=%.3f' % (sample_mean, sample_std))

# define the distribution

dist = norm(sample_mean, sample_std)

# sample probabilities for a range of outcomes

values = [value for value in range(30, 70)]

probabilities = [dist.pdf(value) for value in values]

# plot the histogram and pdf

pyplot.hist(sample, bins=10, density=True)

pyplot.plot(values, probabilities)

pyplot.show()

from matplotlib import pyplot

from numpy.random import normal

from numpy import mean

from numpy import std

from scipy.stats import norm

# generate a sample

sample = normal(loc=50, scale=5, size=1000)

# calculate parameters

sample_mean = mean(sample)

sample_std = std(sample)

print('Mean=%.3f, Standard Deviation=%.3f' % (sample_mean, sample_std))

# define the distribution

dist = norm(sample_mean, sample_std)

# sample probabilities for a range of outcomes

values = [value for value in range(30, 70)]

probabilities = [dist.pdf(value) for value in values]

# plot the histogram and pdf

pyplot.hist(sample, bins=10, density=True)

pyplot.plot(values, probabilities)

pyplot.show()

-----Result-----

Mean=49.852, Standard Deviation=5.023

It is possible that the data does match a common probability distribution, but requires a transformation before parametric density estimation.

For example, you may have outlier values that are far from the mean or center of mass of the distribution.

This may have the effect of giving incorrect estimates of the distribution parameters and, in turn, causing a poor fit to the data.

These outliers should be removed prior to estimating the distribution parameters.

Another example is the data may have a skew or be shifted left or right.

In this case, you might need to transform the data prior to estimating the parameters, such as taking the log or square root, or more generally, using a power transform like the Box-Cox transform.

These types of modifications to the data may not be obvious and effective parametric density estimation may require an iterative process of:

Loop Until Fit of Distribution to Data is Good Enough:

- Estimating distribution parameters

- Reviewing the resulting PDF against the data

- Transforming the data to better fit the distribution

3. Nonparametric Density Estimation

In some cases, a data sample may not resemble a common probability distribution or cannot be easily made to fit the distribution.

This is often the case when the data has two peaks (bimodal distribution) or many peaks (multimodal distribution).

In this case, parametric density estimation is not feasible and alternative methods can be used that do not use a common distribution.

Instead, an algorithm is used to approximate the probability distribution of the data without a pre-defined distribution, referred to as a nonparametric method.

The distributions will still have parameters but are not directly controllable in the same way as simple probability distributions.

Perhaps the most common nonparametric approach for estimating the probability density function of a continuous random variable is called kernel smoothing, or kernel density estimation, KDE for short.

Kernel Density Estimation: Nonparametric method for using a dataset to estimating probabilities for new points.

- Smoothing Parameter (bandwidth): Parameter that controls the number of samples or window of samples used to estimate the probability for a new point.

- Basis Function (kernel): The function chosen used to control the contribution of samples in the dataset toward estimating the probability of a new point.

We can demonstrate this with an example. First, we can construct a bimodal distribution by combining samples from two different normal distributions. Specifically, 300 examples with a mean of 20 and a standard deviation of 5 (the smaller peak), and 700 examples with a mean of 40 and a standard deviation of 5 (the larger peak).

The scikit-learn machine learning library provides the KernelDensity class that implements kernel density estimation. First, the class is constructed with the desired bandwidth (window size) and kernel (basis function) arguments.

It is a good idea to test different configurations on your data. In this case, we will try a bandwidth of 2 and a Gaussian kernel. The class is then fit on a data sample via the fit() function.

The function expects the data to have a 2D shape with the form [rows, columns], therefore we can reshape our data sample to have 1,000 rows

and 1 column.

and 1 column.

We can then evaluate how well the density estimate matches our data by calculating the probabilities for a range of observations and comparing the shape to the histogram, just like we did for the parametric case in the prior section.

The score samples() function on the KernelDensity will calculate the log probability for an array of samples.

We can create a range of samples from 1 to 60, about the range of our domain, calculate the log probabilities, then invert the log operation by calculating the exponent or exp() to return the values to the range 0-1 for normal probabilities.

#example of kernel density estimation for a bimodal data sample

from matplotlib import pyplot

from numpy.random import normal

from numpy import hstack

from numpy import asarray

from numpy import exp

from sklearn.neighbors import KernelDensity

# generate a sample

sample1 = normal(loc=20, scale=5, size=300)

from matplotlib import pyplot

from numpy.random import normal

from numpy import hstack

from numpy import asarray

from numpy import exp

from sklearn.neighbors import KernelDensity

# generate a sample

sample1 = normal(loc=20, scale=5, size=300)

sample2 = normal(loc=40, scale=5, size=700)

sample = hstack((sample1, sample2))

# fit density

model = KernelDensity(bandwidth=2, kernel='gaussian')

sample = sample.reshape((len(sample), 1))

model.fit(sample)

# sample probabilities for a range of outcomes

values = asarray([value for value in range(1, 60)])

values = values.reshape((len(values), 1))

probabilities = model.score_samples(values)

probabilities = exp(probabilities)

# plot the histogram and pdf

pyplot.hist(sample, bins=50, density=True)

pyplot.plot(values[:], probabilities)

pyplot.show()

sample = hstack((sample1, sample2))

# fit density

model = KernelDensity(bandwidth=2, kernel='gaussian')

sample = sample.reshape((len(sample), 1))

model.fit(sample)

# sample probabilities for a range of outcomes

values = asarray([value for value in range(1, 60)])

values = values.reshape((len(values), 1))

probabilities = model.score_samples(values)

probabilities = exp(probabilities)

# plot the histogram and pdf

pyplot.hist(sample, bins=50, density=True)

pyplot.plot(values[:], probabilities)

pyplot.show()

-----Result-----

|

| Histogram and Probability Density Function Plot Estimated via Kernel Density Estimation for a Bimodal Data Sample. |

The KernelDensity class is powerful and does support estimating the PDF for multidimensional data.

In this case, we can see that the PDF is a good fit for the histogram. It is not very smooth and could be made more so by setting the bandwidth argument to 3 samples or higher. Experiment with different values of the bandwidth and the kernel function.

No comments:

Post a Comment