Examples of classification problems include:

- Given an email, classify whether it is spam or not

- Given a handwritten character, classify it as one of the known characters

- Given recent user behavior, classify whether the user will churn or not

Classification requires a training dataset with many examples of inputs and outputs from which to learn.

A model uses the training dataset to learn how best to map examples of input data to specific class labels. As such, the training dataset must be sufficiently representative of the problem and have many examples of each class label.
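One practical way to act on the last point is to count how many examples each class label has before training. The sketch below uses only the Python standard library. The helper names `class_counts` and `underrepresented`, the toy churn labels and the `min_count` threshold are hypothetical choices for illustration. They are not a standard rule for what counts as "many".

```python
from collections import Counter

def class_counts(labels):
    """Count how many training examples carry each class label."""
    return Counter(labels)

def underrepresented(labels, classes, min_count):
    """Return the expected classes that have fewer than min_count examples.

    `classes` lists every label the model should be able to predict, so a
    class with no examples at all is reported too (Counter returns 0 for it).
    """
    counts = class_counts(labels)
    return [c for c in classes if counts[c] < min_count]

# Hypothetical labels for a tiny churn dataset.
y_train = ["churn", "stay", "stay", "stay", "churn", "stay", "stay"]
print(class_counts(y_train))                            # Counter({'stay': 5, 'churn': 2})
print(underrepresented(y_train, ["churn", "stay"], 3))  # ['churn']
```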

There are four main types of classification tasks that you may encounter:

- Binary Classification

- Multi-Class Classification

- Multi-Label Classification

- Imbalanced Classification

Binary Classification

Binary Classification refers to classification tasks that have two class labels.

Examples:

- Email spam detection (spam or not)

- Churn prediction (churn or not)

- Conversion prediction (buy or not)

Typically, binary classification tasks involve one class that is the normal state and another class that is the abnormal state.

The class for the normal state is assigned the class label 0, and the class for the abnormal state is assigned the class label 1.

It is common to model a binary classification task with a model that predicts a Bernoulli probability distribution for each example.
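In concrete terms, the model outputs a single probability p = P(y = 1 | x) for the abnormal class, and the label is treated as Bernoulli-distributed with that p. The sketch below assumes a logistic (sigmoid) link from a raw model score to p and a 0.5 decision threshold. Both are common choices rather than requirements. The score value is made up.

```python
import math

def sigmoid(z):
    """Map a real-valued score to a probability in (0, 1), avoiding overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def bernoulli_log_likelihood(y, p):
    """Log-probability of observing label y (0 or 1) under Bernoulli(p)."""
    return y * math.log(p) + (1 - y) * math.log(1 - p)

def predict_label(p, threshold=0.5):
    """Convert the predicted probability of class 1 into a hard 0/1 label."""
    return 1 if p >= threshold else 0

score = 2.0                # hypothetical raw model score for one email
p_spam = sigmoid(score)    # P(spam | email)
print(round(p_spam, 3))                               # 0.881
print(predict_label(p_spam))                          # 1
print(round(bernoulli_log_likelihood(1, p_spam), 3))  # -0.127
```

Maximizing this log-likelihood summed over the training set is equivalent to minimizing log loss (binary cross-entropy), which is how such models are usually fit.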

Popular algorithms that can be used for binary classification include:

- Logistic Regression

- k-Nearest Neighbors

- Decision Trees

- Support Vector Machine

- Naive Bayes

Some algorithms are specifically designed for binary classification and do not natively support more than two classes; examples include Logistic Regression and Support Vector Machine.
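To make the Logistic Regression case concrete, here is a from-scratch sketch that fits p(y = 1 | x) = sigmoid(w * x + b) to a single feature by batch gradient descent on log loss. The learning rate, the epoch count and the toy data (deliberately centred on zero) are illustrative assumptions. In practice a library implementation such as scikit-learn's `LogisticRegression` would normally be used instead.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def fit_logistic(xs, ys, lr=0.1, epochs=2000):
    """Fit p(y=1 | x) = sigmoid(w*x + b) by batch gradient descent on mean log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # d(log loss)/d(score) for one example
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

def predict(w, b, x, threshold=0.5):
    """Label 1 (abnormal) when the predicted probability reaches the threshold."""
    return 1 if sigmoid(w * x + b) >= threshold else 0

# Hypothetical 1-D data: negative values are normal (0), positive values are abnormal (1).
xs = [-3.0, -2.0, -1.5, -1.0, 1.0, 1.5, 2.0, 3.0]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = fit_logistic(xs, ys)
print([predict(w, b, x) for x in [-1.2, 2.5]])  # [0, 1]
```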

Multi-Class Classification

Multi-Class Classification refers to classification tasks that have more than two class labels.

Examples include:

- Face classification

- Plant species classification

- Optical character classification

The number of class labels may be very large on some problems. For example, in a face recognition system a model may predict that a photo belongs to one of thousands or tens of thousands of faces.

It is common to model a multi-class classification task with a model that predicts a Multinoulli (categorical) probability distribution for each example.
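A Multinoulli (categorical) distribution assigns one probability to each class, and the probabilities sum to 1. The sketch below assumes a common way to produce it: apply the softmax function to one raw score per class, then predict the most probable class. The species names and scores are hypothetical.

```python
import math

def softmax(scores):
    """Turn one raw score per class into a Multinoulli (categorical) distribution."""
    m = max(scores)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

classes = ["rose", "tulip", "daisy"]  # hypothetical plant species
scores = [2.0, 1.0, 0.1]              # hypothetical model outputs for one photo
probs = softmax(scores)
print([round(p, 3) for p in probs])       # [0.659, 0.242, 0.099]
print(classes[probs.index(max(probs))])   # rose
```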

Popular algorithms that can be used for multi-class classification include:

- k-Nearest Neighbors

- Decision Trees

- Naive Bayes

- Random Forest

- Gradient Boosting
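k-Nearest Neighbors handles any number of classes without modification, because its prediction is a majority vote among the closest training examples. The following is a minimal sketch that assumes Euclidean distance, k = 3 and a made-up three-class dataset. `math.dist` requires Python 3.8 or later.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, x, k=3):
    """Predict the class of x by majority vote among its k nearest training examples."""
    # Sort (point, label) pairs by Euclidean distance to the query point.
    neighbours = sorted(zip(train_X, train_y), key=lambda pair: math.dist(pair[0], x))
    votes = Counter(label for _, label in neighbours[:k])
    # On a tied vote, most_common(1) returns the label seen first, i.e. the nearer one.
    return votes.most_common(1)[0][0]

# Hypothetical 2-D points from three classes.
train_X = [(1, 1), (1, 2), (2, 1), (6, 6), (6, 7), (7, 6), (1, 8), (2, 8), (1, 9)]
train_y = ["a", "a", "a", "b", "b", "b", "c", "c", "c"]
print(knn_predict(train_X, train_y, (1.5, 1.5)))  # a
print(knn_predict(train_X, train_y, (6.5, 6.2)))  # b
print(knn_predict(train_X, train_y, (1.5, 8.5)))  # c
```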

Algorithms that are designed for binary classification can be adapted for multi-class problems using one of two strategies:

- One-vs-Rest: Fit one binary classification model for each class vs. all other classes.

- One-vs-One: Fit one binary classification model for each pair of classes.

Binary classification algorithms that can use these strategies for multi-class classification include:

- Logistic Regression

- Support Vector Machine
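Both strategies can be sketched generically around any binary classifier that returns a signed score. In the sketch below, `fit_binary` is a hypothetical stand-in (a nearest-centroid scorer) that lets the example run without external libraries. In practice Logistic Regression or a Support Vector Machine would take its place. With K classes, One-vs-Rest fits K models and One-vs-One fits K(K - 1)/2, so the four-class toy dataset yields 4 and 6 models respectively.

```python
import math
from collections import Counter
from itertools import combinations

def centroid(points):
    """Coordinate-wise mean of a list of points."""
    return tuple(sum(c) / len(points) for c in zip(*points))

def fit_binary(X, y):
    """Hypothetical stand-in binary classifier (nearest centroid).

    y holds 1 for the positive class and 0 for the negative class. The returned
    function gives a score that is > 0 when x is closer to the positive centroid.
    """
    pos = centroid([x for x, t in zip(X, y) if t == 1])
    neg = centroid([x for x, t in zip(X, y) if t == 0])
    return lambda x: math.dist(x, neg) - math.dist(x, pos)

def fit_one_vs_rest(X, y):
    # One binary model per class: that class (1) vs. all other classes (0).
    return {c: fit_binary(X, [1 if t == c else 0 for t in y]) for c in sorted(set(y))}

def predict_one_vs_rest(models, x):
    # Choose the class whose "class vs. rest" model gives the highest score.
    return max(models, key=lambda c: models[c](x))

def fit_one_vs_one(X, y):
    # One binary model per pair of classes, trained only on those two classes.
    models = {}
    for a, b in combinations(sorted(set(y)), 2):
        pairs = [(x, t) for x, t in zip(X, y) if t in (a, b)]
        models[(a, b)] = fit_binary([x for x, _ in pairs], [1 if t == a else 0 for _, t in pairs])
    return models

def predict_one_vs_one(models, x):
    # Each pairwise model casts one vote; the class with the most votes wins.
    votes = Counter(a if model(x) > 0 else b for (a, b), model in models.items())
    return votes.most_common(1)[0][0]

# Hypothetical 2-D points from four classes.
X = [(1, 1), (1, 2), (2, 1), (6, 6), (6, 7), (7, 6),
     (1, 8), (2, 8), (1, 9), (8, 1), (8, 2), (9, 1)]
y = ["a"] * 3 + ["b"] * 3 + ["c"] * 3 + ["d"] * 3
ovr, ovo = fit_one_vs_rest(X, y), fit_one_vs_one(X, y)
print(len(ovr), len(ovo))  # 4 6
print(predict_one_vs_rest(ovr, (6.5, 6.2)), predict_one_vs_one(ovo, (6.5, 6.2)))  # b b
```

One-vs-One trains more models, but each sees only two classes' data. One-vs-Rest trains fewer models, each on the full dataset.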

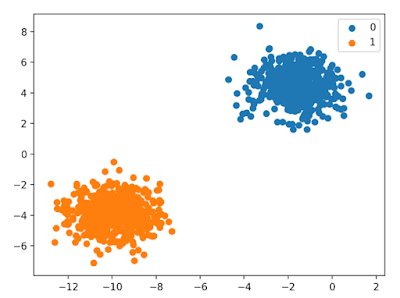

Scatter Plot of Multi-Class Classification Dataset

Multi-Label Classification

Multi-label classification refers to those classification tasks that have two or more class labels, where one or more class labels may be predicted for each example.

Consider the example of photo classification, where a given photo may have multiple objects in the scene and the model may predict the presence of multiple known objects in the photo, such as "bicycle", "apple", "person", etc.

This is unlike binary classification and multi-class classification, where a single class label is predicted for each example.

It is common to model multi-label classification tasks with a model that predicts multiple outputs, with each output predicted as a Bernoulli probability distribution. This is essentially a model that makes multiple binary classification predictions for each example.
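Here is a minimal sketch of that idea. It assumes the model produces one raw score per known label and passes each score through its own sigmoid. Every label whose probability reaches a 0.5 threshold is predicted, so an example may receive zero, one or several labels. The scores and the helper name `predict_labels` are hypothetical.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict_labels(scores, label_names, threshold=0.5):
    """Treat each output as an independent Bernoulli and keep every label that clears the threshold."""
    probs = {name: sigmoid(s) for name, s in zip(label_names, scores)}
    chosen = [name for name, p in probs.items() if p >= threshold]
    return chosen, probs

labels = ["bicycle", "apple", "person"]
scores = [1.7, -2.3, 0.4]  # hypothetical raw outputs for one photo
chosen, probs = predict_labels(scores, labels)
print(chosen)  # ['bicycle', 'person']
```

Because each label is an independent yes/no decision, these probabilities are not required to sum to 1.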

Specialized versions of standard classification algorithms can be used, so-called multi-label versions of the algorithms, including:

- Multi-label Decision Trees

- Multi-label Random Forests

- Multi-label Gradient Boosting

Imbalanced Classification

Imbalanced Classification refers to classification tasks where the number of examples in each class is unequally distributed.

Typically, imbalanced classification tasks are binary classification tasks where the majority of examples in the training dataset belong to the normal class and a minority of examples belong to the abnormal class.

Examples include:

- Fraud detection

- Outlier detection

- Medical diagnostic tests

Specialized techniques may be used to change the composition of samples in the training dataset by undersampling the majority class or oversampling the minority class.

Examples include:

- Random Undersampling

- SMOTE Oversampling
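Both resampling ideas can be sketched in plain Python. This is a simplified illustration under stated assumptions (binary labels, numeric feature tuples, Euclidean distance, and a seeded `random.Random` for reproducibility). It is not the reference SMOTE implementation. The `imbalanced-learn` library provides production versions as `RandomUnderSampler` and `SMOTE`. The helper names and the toy data here are hypothetical.

```python
import math
import random

def random_undersample(X, y, majority, rng):
    """Randomly drop majority-class examples until both classes have equal counts."""
    majority_idx = [i for i, t in enumerate(y) if t == majority]
    minority_idx = [i for i, t in enumerate(y) if t != majority]
    keep = sorted(rng.sample(majority_idx, len(minority_idx)) + minority_idx)
    return [X[i] for i in keep], [y[i] for i in keep]

def smote(minority_X, n_new, k, rng):
    """Create n_new synthetic minority examples (simplified SMOTE).

    Each new point lies on the segment between a randomly chosen minority
    example and one of its k nearest minority-class neighbours.
    """
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority_X))
        base = minority_X[i]
        others = [p for j, p in enumerate(minority_X) if j != i]
        neighbours = sorted(others, key=lambda p: math.dist(p, base))[:k]
        other = rng.choice(neighbours)
        gap = rng.random()  # interpolation factor in [0, 1)
        synthetic.append(tuple(b + gap * (o - b) for b, o in zip(base, other)))
    return synthetic

rng = random.Random(0)
# Hypothetical 2-D data: 8 majority (0) examples, 3 minority (1) examples.
X = [(0.0, 0.0), (0.5, 0.2), (1.0, 0.1), (0.2, 0.9), (0.8, 0.7),
     (1.2, 0.4), (0.3, 0.5), (0.9, 1.1), (3.0, 3.0), (3.5, 2.8), (3.2, 3.6)]
y = [0] * 8 + [1] * 3

X_under, y_under = random_undersample(X, y, majority=0, rng=rng)
print(y_under.count(0), y_under.count(1))  # 3 3

minority = [x for x, t in zip(X, y) if t == 1]
synthetic = smote(minority, n_new=5, k=2, rng=rng)
print(len(synthetic))  # 5
```

Undersampling discards information from the majority class. SMOTE instead adds plausible minority points, although they are interpolations of existing points rather than new observations.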

Specialized modeling algorithms may be used that pay more attention to the minority class when fitting the model on the training dataset, such as cost-sensitive machine learning algorithms.

Examples include:

- Cost-sensitive Logistic Regression

- Cost-sensitive Decision Trees

- Cost-sensitive Support Vector Machines
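Cost-sensitive learning is often implemented by weighting each example's loss according to its class, so that mistakes on the minority class cost more. The sketch below assumes the common "balanced" heuristic, weight = n_samples / (n_classes * class_count), which is the formula scikit-learn uses for `class_weight="balanced"`. The labels, the predicted probabilities and the helper names are hypothetical.

```python
import math
from collections import Counter

def balanced_class_weights(y):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(y)
    n, k = len(y), len(counts)
    return {c: n / (k * m) for c, m in counts.items()}

def weighted_log_loss(y, p, weights):
    """Mean binary cross-entropy, with each example's loss scaled by its class weight."""
    total = 0.0
    for t, q in zip(y, p):
        loss = -(t * math.log(q) + (1 - t) * math.log(1 - q))
        total += weights[t] * loss
    return total / len(y)

y = [0] * 9 + [1]  # hypothetical: 9 normal examples, 1 abnormal
weights = balanced_class_weights(y)
print(weights)     # {0: 0.5555555555555556, 1: 5.0}

# A lazy model that gives every example a 10% chance of being abnormal.
p = [0.1] * 10
print(round(weighted_log_loss(y, p, {0: 1.0, 1: 1.0}), 3))  # 0.325 (unweighted)
print(round(weighted_log_loss(y, p, weights), 3))           # 1.204 (cost-sensitive)
```

Under the weighted loss, missing the single abnormal example dominates the total, so training pushes the model harder toward catching it. scikit-learn's `LogisticRegression`, `DecisionTreeClassifier` and `SVC` expose the same idea through their `class_weight` parameter.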

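Finally, alternative performance metrics may be required because classification accuracy can be misleading. A model that always predicts the majority class can achieve high accuracy while never identifying a single minority-class example.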

Examples include:

- Precision

- Recall

- F-Measure
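The problem with accuracy, and how these metrics expose it, is easy to see on a toy example. The sketch computes precision, recall and the F-Measure (here the balanced F1 score) by hand for the positive (abnormal) class. It treats 0/0 as 0, which is a convention rather than a universal rule. The labels and predictions are hypothetical. scikit-learn offers the same metrics as `precision_score`, `recall_score` and `f1_score`.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return true positives, false positives and false negatives for one class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    return tp, fp, fn

def precision_recall_f1(y_true, y_pred, positive=1):
    tp, fp, fn = confusion_counts(y_true, y_pred, positive)
    precision = tp / (tp + fp) if tp + fp else 0.0  # 0 when nothing is predicted positive
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

y_true = [0] * 95 + [1] * 5

# A useless model that always predicts the normal class.
always_normal = [0] * 100
print(accuracy(y_true, always_normal))             # 0.95
print(precision_recall_f1(y_true, always_normal))  # (0.0, 0.0, 0.0)

# A model that catches 4 of the 5 abnormal cases at the cost of 6 false alarms.
y_pred = [0] * 89 + [1] * 6 + [1] * 4 + [0]
print(accuracy(y_true, y_pred))                                      # 0.93
print([round(m, 3) for m in precision_recall_f1(y_true, y_pred)])    # [0.4, 0.8, 0.533]
```

The second model has lower accuracy but is far more useful, which only the precision, recall and F-Measure figures reveal.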
