In this tutorial, you will discover basic data cleaning methods. After completing this tutorial, you will know:

- How to identify and remove column variables that only have a single value.

- How to identify and consider column variables with very few unique values.

- How to identify and remove rows that contain that duplicate observations.

This tutorial is divided into seven parts; they are:

- Messy datasets

- Identify columns that contain a single value

- Delete columns that contain a single value

- Consider columns that have very few value

- Remove columns that have a low variance

- Identify rows that contain duplicate data

- Delete rows that contain duplicate data

A. Messy Data

Before we dive into identifying and correcting messy data, let's define some messy datasets. We will use two datasets as the basic for this tutorial, the oil spill dataset and iris flowers datasets.

1. Oil Spill Dataset

There are 937 cases. Each case is comprised of 48 numerical computer vision derived features, a patch number, and a class label. The normal case is no oil spill assigned the class label of 0, whereas an oil spill is indicated by a class label of 1. There are 896 cases for no oil spill and 41 cases of an oil spill.

Review the contents of the data file. We can see that the first column contains integers for the patch number. We can also see that the computer vision derived features are real-valued with differing scales such as thousands in the second column and fractions in other columns. This dataset contains columns with very few unique values that provides a good basis for data cleaning.

2. Iris Flowers Dataset

There are 150 observations with 4 input variables and 1 output variable. You can access the entire dataset here:

We can see that all four input variables are numeric and that the target class variable is a string representing the iris flower species. This dataset contains duplicate rows that provides a good basis for data cleaning.

B. Identify columns that contain a single value

A single value means that each row for that column has the same value. For example, the column X1 has the value 1.0 for all rows in the dataset.

|

| Example of a column that has a single value |

Columns that have a single value for all row do not contain any information for modeling.

Depending on the choice of data preparation and modeling algorithms, variables with a single value can also cause errors or unexpected results.

You can detect rows that have this property using the unique() NumPy function that will report the number of unique values in each column.

# summarize the number of unique values for each column using numpy

from numpy import loadtxt

from numpy import unique

# load the dataset

data = loadtxt('oil-spill.csv', delimiter=',')

# summarize the number of unique values in each column

for i in range(data.shape[1]):

print(i, len(unique(data[:, i])))

from numpy import loadtxt

from numpy import unique

# load the dataset

data = loadtxt('oil-spill.csv', delimiter=',')

# summarize the number of unique values in each column

for i in range(data.shape[1]):

print(i, len(unique(data[:, i])))

A simpler approach is to use the nunique() Pandas function that does the hard work for you.

# summarize the number of unique values for each column using numpy

from pandas import read_csv

# load the dataset

df = read_csv('oil-spill.csv', header=None)

# summarize the number of unique values in each column

print(df.nunique())

from pandas import read_csv

# load the dataset

df = read_csv('oil-spill.csv', header=None)

# summarize the number of unique values in each column

print(df.nunique())

-----Result-----

|

| Example a simpler approach to reporting the number of unique values in each column |

We can see that column index 22 only has a single value and should be removed.

C. Delete Columns That Contain a Single Value

Variables or columns that have a single value should probably be removed from your dataset.

# delete columns with a single unique value

from pandas import read_csv

# load the dataset

df = read_csv('oil-spill.csv', header=None)

print(df.shape)

# get number of unique values for each column

counts = df.nunique()

# record columns to delete

to_del = [i for i,v in enumerate(counts) if v == 1]

from pandas import read_csv

# load the dataset

df = read_csv('oil-spill.csv', header=None)

print(df.shape)

# get number of unique values for each column

counts = df.nunique()

# record columns to delete

to_del = [i for i,v in enumerate(counts) if v == 1]

print(to_del)

# drop useless columns

df.drop(to_del, axis=1, inplace=True)

print(df.shape)

# drop useless columns

df.drop(to_del, axis=1, inplace=True)

print(df.shape)

-----Result-----

(937, 50)

[22]

(937, 49)

[22]

(937, 49)

D. Consider Columns That Have Very Few Values

In the previous section, we saw that some columns in the example dataset had very few unique values. For example, there were columns that only had 2, 4, and 9 unique values. This might make sense for ordinal or categorical variables.

We can refer to these columns or predictors as near-zero variance predictors, as their variance is not zero, but a very small number close to zero.

These columns may or may not contribute to the skill of a model. We can’t assume that they are useless to modeling.

To help highlight columns of this type, you can calculate the number of unique values for each variable as a percentage of the total number of rows in the dataset.

# summarize the percentage of unique values for each column using numpy

from numpy import loadtxt

from numpy import unique

# load the dataset

data = loadtxt('oil-spill.csv', delimiter=',')

# summarize the number of unique values in each column

for i in range(data.shape[1]):

num = len(unique(data[:, i]))

percentage = float(num) / data.shape[0] * 100

if percentage < 1:

print('%d, %d, %.1f%%' % (i, num, percentage))

from numpy import loadtxt

from numpy import unique

# load the dataset

data = loadtxt('oil-spill.csv', delimiter=',')

# summarize the number of unique values in each column

for i in range(data.shape[1]):

num = len(unique(data[:, i]))

percentage = float(num) / data.shape[0] * 100

if percentage < 1:

print('%d, %d, %.1f%%' % (i, num, percentage))

-----Result-----

|

| Example output from reporting on columns with low variance

|

This does not mean that these rows and columns should be deleted, but they require further attention. For example:

- Perhaps the unique values can be encoded as ordinal values?

- Perhaps the unique values can be encoded as categorical values?

- Perhaps compare model skill with each variable removed from the dataset?

# delete columns where number of unique values is less than 1% of the rows

from pandas import read_csv

# load the dataset

df = read_csv('oil-spill.csv', header=None)

print(df.shape)

# get number of unique values for each column

counts = df.nunique()

# record columns to delete

to_del = [i for i,v in enumerate(counts) if (float(v)/df.shape[0]*100) < 1]

print(to_del)

# drop useless columns

df.drop(to_del, axis=1, inplace=True)

print(df.shape)

from pandas import read_csv

# load the dataset

df = read_csv('oil-spill.csv', header=None)

print(df.shape)

# get number of unique values for each column

counts = df.nunique()

# record columns to delete

to_del = [i for i,v in enumerate(counts) if (float(v)/df.shape[0]*100) < 1]

print(to_del)

# drop useless columns

df.drop(to_del, axis=1, inplace=True)

print(df.shape)

-----Result-----

(937, 50)

[21, 22, 24, 25, 26, 32, 36, 38, 39, 45, 49]

(937, 39)

[21, 22, 24, 25, 26, 32, 36, 38, 39, 45, 49]

(937, 39)

E. Remove Columns That Have A Low Variance

Another approach to the problem of removing columns with few unique values is to consider the variance of the column. Recall that the variance is a statistic calculated on a variable as the average squared difference of values in the sample from the mean. The variance can be used as a filter for identifying columns to be removed from the dataset. A column that has a single value has a variance of 0.0, and a column that has very few unique values may have a small variance.

The VarianceThreshold class from the scikit-learn library supports this as a type of feature selection. An instance of the class can be created and we can specify the threshold argument, which defaults to 0.0 to remove columns with a single value.

# example of applying the variance threshold for feature selection

from pandas import read_csv

from sklearn.feature_selection import VarianceThreshold

# load the dataset

df = read_csv('oil-spill.csv', header=None)

# split data into inputs and outputs

data = df.values

X = data[:, :-1]

y = data[:, -1]

print(X.shape, y.shape)

# define the transform

transform = VarianceThreshold()

# transform the input data

X_sel = transform.fit_transform(X)

print(X_sel.shape)

from pandas import read_csv

from sklearn.feature_selection import VarianceThreshold

# load the dataset

df = read_csv('oil-spill.csv', header=None)

# split data into inputs and outputs

data = df.values

X = data[:, :-1]

y = data[:, -1]

print(X.shape, y.shape)

# define the transform

transform = VarianceThreshold()

# transform the input data

X_sel = transform.fit_transform(X)

print(X_sel.shape)

-----Result-----

(937, 49) (937,)

(937, 48)

(937, 48)

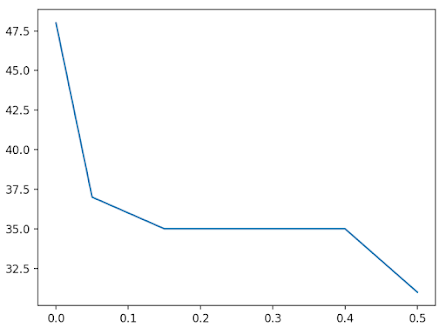

# explore the effect of the variance thresholds on the number of selected features

from numpy import arange

from pandas import read_csv

from sklearn.feature_selection import VarianceThreshold

from matplotlib import pyplot

# load the dataset

df = read_csv('oil-spill.csv', header=None)

# split data into inputs and outputs

data = df.values

X = data[:, :-1]

y = data[:, -1]

print(X.shape, y.shape)

# define thresholds to check

thresholds = arange(0.0, 0.55, 0.05)

# apply transform with each threshold

results = list()

for t in thresholds:

# define the transform

transform = VarianceThreshold(threshold=t)

# transform the input data

X_sel = transform.fit_transform(X)

# determine the number of input features

n_features = X_sel.shape[1]

print('>Threshold=%.2f, Features=%d' % (t, n_features))

# store the result

results.append(n_features)

# plot the threshold vs the number of selected features

pyplot.plot(thresholds, results)

pyplot.show()

from numpy import arange

from pandas import read_csv

from sklearn.feature_selection import VarianceThreshold

from matplotlib import pyplot

# load the dataset

df = read_csv('oil-spill.csv', header=None)

# split data into inputs and outputs

data = df.values

X = data[:, :-1]

y = data[:, -1]

print(X.shape, y.shape)

# define thresholds to check

thresholds = arange(0.0, 0.55, 0.05)

# apply transform with each threshold

results = list()

for t in thresholds:

# define the transform

transform = VarianceThreshold(threshold=t)

# transform the input data

X_sel = transform.fit_transform(X)

# determine the number of input features

n_features = X_sel.shape[1]

print('>Threshold=%.2f, Features=%d' % (t, n_features))

# store the result

results.append(n_features)

# plot the threshold vs the number of selected features

pyplot.plot(thresholds, results)

pyplot.show()

-----Result-----

(937, 49) (937,)

>Threshold=0.00, Features=48

>Threshold=0.05, Features=37

>Threshold=0.10, Features=36

>Threshold=0.15, Features=35

>Threshold=0.20, Features=35

>Threshold=0.25, Features=35

>Threshold=0.30, Features=35

>Threshold=0.35, Features=35

>Threshold=0.40, Features=35

>Threshold=0.45, Features=33

>Threshold=0.50, Features=31

>Threshold=0.00, Features=48

>Threshold=0.05, Features=37

>Threshold=0.10, Features=36

>Threshold=0.15, Features=35

>Threshold=0.20, Features=35

>Threshold=0.25, Features=35

>Threshold=0.30, Features=35

>Threshold=0.35, Features=35

>Threshold=0.40, Features=35

>Threshold=0.45, Features=33

>Threshold=0.50, Features=31

|

| Line Plot of Variance Threshold Versus Number of Selected Features

|

F. Identify Rows That Contain Duplicate Data

# locate rows of duplicate data

from pandas import read_csv

# load the dataset

df = read_csv('iris.csv', header=None)

# calculate duplicates

dups = df.duplicated()

# report if there are any duplicates

print(dups.any())

# list all duplicate rows

print(df[dups])

from pandas import read_csv

# load the dataset

df = read_csv('iris.csv', header=None)

# calculate duplicates

dups = df.duplicated()

# report if there are any duplicates

print(dups.any())

# list all duplicate rows

print(df[dups])

----Result-----

True

0 1 2 3 4

34 4.9 3.1 1.5 0.1 Iris-setosa

37 4.9 3.1 1.5 0.1 Iris-setosa

142 5.8 2.7 5.1 1.9 Iris-virginica

0 1 2 3 4

34 4.9 3.1 1.5 0.1 Iris-setosa

37 4.9 3.1 1.5 0.1 Iris-setosa

142 5.8 2.7 5.1 1.9 Iris-virginica

G. Delete Rows That Contain Duplicate Data

# delete rows of duplicate data from the dataset

from pandas import read_csv

# load the dataset

df = read_csv('iris.csv', header=None)

print(df.shape)

# delete duplicate rows

df.drop_duplicates(inplace=True)

print(df.shape)

from pandas import read_csv

# load the dataset

df = read_csv('iris.csv', header=None)

print(df.shape)

# delete duplicate rows

df.drop_duplicates(inplace=True)

print(df.shape)

-----Result-----

(150, 5)

(147, 5)

(147, 5)

No comments:

Post a Comment