Sometimes a dataset can contain extreme values that are outside the range of what is expected and unlike the other data. These are called outliers. Understanding them, and sometimes removing them, can often improve machine learning modeling and model skill in general.

After completing this tutorial, you will know:

- That an outlier is an unlikely observation in a dataset and may have one of many causes.

- How to use simple univariate statistics like standard deviation and interquartile range to identify and remove outliers from a data sample.

- How to use an outlier detection model to identify and remove rows from a training dataset in order to lift predictive modeling performance.

This tutorial is divided into five parts; they are:

- What are Outliers?

- Test Dataset

- Standard Deviation Method

- Interquartile Range Method

- Automatic Outlier Detection

1. What are Outliers?

An outlier is an observation that is unlike the other observations. They are rare, distinct, or do not fit in some way.

Outliers can have many causes, such as:

- Measurement or input error.

- Data corruption.

- True outlier observation.

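There is no precise way to define and identify outliers in general, because every dataset has its own specifics. Nevertheless, we can use statistical methods to identify observations that appear to be rare or unlikely given the available data.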

This does not mean that the values identified are necessarily outliers, or that they should be removed.

A good tip is to plot the identified outlier values, perhaps alongside the non-outlier values, to see if there is any systematic relationship or pattern to the outliers.
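A minimal sketch of that tip, assuming matplotlib is installed: it generates an illustrative Gaussian sample (mean 50, standard deviation 5) with NumPy's newer Generator API, so its values will not match the seeded sample used later in this tutorial, flags values more than three standard deviations from the mean, and draws flagged points in a different color from the rest. The plot layout and variable names are illustrative choices, not part of the original method.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the sketch runs without a display; remove for notebooks
import matplotlib.pyplot as plt
import numpy as np

# illustrative Gaussian sample, similar in shape to the tutorial's test data
rng = np.random.default_rng(1)
data = rng.normal(loc=50, scale=5, size=10000)

# flag values more than three standard deviations from the mean
mu, sigma = data.mean(), data.std()
is_outlier = np.abs(data - mu) > 3 * sigma

# plot every observation against its position, coloring flagged values separately
index = np.arange(len(data))
fig, ax = plt.subplots(figsize=(8, 4))
ax.scatter(index[~is_outlier], data[~is_outlier], s=2, color="grey", label="non-outlier")
ax.scatter(index[is_outlier], data[is_outlier], s=12, color="red", label="flagged")
ax.set_xlabel("observation index")
ax.set_ylabel("value")
ax.legend()
# with an interactive backend, call plt.show(); otherwise fig.savefig("outliers.png")
```

With real data, plotting the flagged values against another variable (time, a category, a sensor ID) rather than the row index is usually what reveals a systematic cause such as a faulty instrument.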

2. Test Dataset

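We will generate a population of 10,000 random numbers drawn from a Gaussian distribution with a mean of 50 and a standard deviation of 5. Numbers drawn from a Gaussian distribution will include outliers: because of the shape of the distribution itself, a few values will lie a long way from the mean, and these rare values are the ones we want to identify.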

```python
# generate gaussian data
from numpy.random import seed
from numpy.random import randn
from numpy import mean
from numpy import std
# seed the random number generator
seed(1)
# generate univariate observations
data = 5 * randn(10000) + 50
# summarize
print('mean=%.3f stdv=%.3f' % (mean(data), std(data)))
```


-----Result-----

mean=50.049 stdv=4.994

3. Standard Deviation Method

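If we know that the distribution of values in the sample is Gaussian or Gaussian-like, we can use the standard deviation of the sample as a cut-off for identifying outliers. About 99.7% of values drawn from a Gaussian lie within three standard deviations of the mean, so a common choice, used below, is to treat values more than three standard deviations from the mean as outliers.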
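The three-standard-deviation cut-off follows from the Gaussian's tail probabilities: the share of an ideal Gaussian population lying more than k standard deviations from the mean is 1 - erf(k / sqrt(2)). This short sketch uses only Python's standard library to compute that share and the matching expected count for a sample of 10,000. It describes an idealized distribution, so any real sample will deviate from these numbers somewhat; `fraction_outside` is an illustrative helper name.

```python
from math import erf, sqrt


def fraction_outside(k):
    """Share of a Gaussian population lying more than k standard deviations from the mean."""
    return 1.0 - erf(k / sqrt(2.0))


n = 10000
for k in (1, 2, 3):
    share = fraction_outside(k)
    print("k=%d: %.4f%% outside, about %.0f of %d values" % (k, 100 * share, n * share, n))
```

For k = 3 the share is about 0.27%, or roughly 27 values in 10,000, which is in line with the 29 outliers the standard deviation method reports for this tutorial's sample.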

```python
# identify outliers with standard deviation
from numpy.random import seed
from numpy.random import randn
from numpy import mean
from numpy import std
# seed the random number generator
seed(1)
# generate univariate observations
data = 5 * randn(10000) + 50
# calculate summary statistics
data_mean, data_std = mean(data), std(data)
# define outliers
cut_off = data_std * 3
lower, upper = data_mean - cut_off, data_mean + cut_off
# identify outliers
outliers = [x for x in data if x < lower or x > upper]
print('Identified outliers: %d' % len(outliers))
# remove outliers
outliers_removed = [x for x in data if x >= lower and x <= upper]
print('Non-outlier observations: %d' % len(outliers_removed))
```


-----Result-----

Identified outliers: 29

Non-outlier observations: 9971

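The list comprehensions above loop over the sample in pure Python. A sketch of the same standard deviation rule using NumPy boolean masks, which is usually faster on large arrays, regenerates the tutorial's sample with the same seed and also runs the loop-based version so the two counts can be compared. The helper name `std_bounds` is hypothetical.

```python
import numpy as np
from numpy.random import randn, seed


def std_bounds(values, k=3.0):
    """Return (lower, upper) limits k standard deviations either side of the mean."""
    mu, sigma = np.mean(values), np.std(values)
    return mu - k * sigma, mu + k * sigma


# regenerate the tutorial's sample
seed(1)
data = 5 * randn(10000) + 50

lower, upper = std_bounds(data, k=3.0)
# boolean mask: True where a value lies outside the limits
outlier_mask = (data < lower) | (data > upper)
outliers = data[outlier_mask]
outliers_removed = data[~outlier_mask]

# the loop-based version from the tutorial, for comparison
loop_count = len([x for x in data if x < lower or x > upper])

print('Identified outliers: %d' % len(outliers))
print('Non-outlier observations: %d' % len(outliers_removed))
```

Because `~outlier_mask` selects exactly the values inside the limits, the two arrays always partition the sample, and the mask can be reused to filter other arrays aligned with `data`.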

You can use the same approach if you have multivariate data, i.e. data with multiple variables, each with a different Gaussian distribution.

You can imagine bounds in two dimensions that would define an ellipse if you have two variables. Observations that fall outside of the ellipse would be considered outliers.

In three dimensions, this would be an ellipsoid, and so on into higher dimensions. Alternatively, if you know more about the domain, an outlier may be identified by exceeding the limits on one dimension or on a subset of the data dimensions.
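For two roughly Gaussian variables, one way to turn three-standard-deviation limits into the ellipse described above is to standardize each variable and flag observations whose combined standardized distance exceeds three. This sketch assumes the variables are independent, so the ellipse is aligned with the axes, and uses synthetic data with illustrative means and spreads. For correlated variables, the Mahalanobis distance generalizes the same idea to a tilted ellipse.

```python
import numpy as np

rng = np.random.default_rng(1)
# two independent Gaussian variables with different (illustrative) means and spreads
x = rng.normal(loc=50, scale=5, size=5000)
y = rng.normal(loc=10, scale=2, size=5000)
X = np.column_stack([x, y])

k = 3.0
mu, sigma = X.mean(axis=0), X.std(axis=0)
# standardized coordinates: each column measured in units of its own standard deviation
z = (X - mu) / sigma
# a point is inside the ellipse when (z1/k)^2 + (z2/k)^2 <= 1, i.e. z1^2 + z2^2 <= k^2
outside = (z ** 2).sum(axis=1) > k ** 2

print('Outside the ellipse: %d of %d' % (outside.sum(), len(X)))
```

In two dimensions this ellipse flags more points than a single three-standard-deviation limit would: for independent Gaussians, the expected share outside is exp(-k^2 / 2), about 1.1% for k = 3.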

4. Interquartile Range Method

Not all data is normal or normal enough to treat it as being drawn from a Gaussian distribution.

A good statistic for summarizing a sample of data drawn from a non-Gaussian distribution is the Interquartile Range, or IQR for short.

The IQR is calculated as the difference between the 75th and the 25th percentiles of the data and defines the box in a box and whisker plot.

We refer to the percentiles as quartiles (quart meaning 4) because the data is divided into four groups via the 25th, 50th and 75th values. The IQR defines the middle 50 percent of the data, or the body of the data.

Statistics-based outlier detection techniques assume that normal data points appear in the high-probability regions of a stochastic model, while outliers occur in its low-probability regions.

The IQR can be used to identify outliers by defining limits on the sample values that are a factor k of the IQR below the 25th percentile or above the 75th percentile. The most common value for k is 1.5. A factor k of 3 or more can be used to identify values that are extreme outliers, or "far outs" in the terminology of box and whisker plots.
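A small worked example of these limits, on a hypothetical ten-value sample with one obvious extreme value, computes the fences for both the common factor k = 1.5 and the stricter k = 3. `iqr_bounds` is an illustrative helper. Note that `numpy.percentile` interpolates linearly between data points by default, so other percentile definitions can give slightly different quartiles on small samples.

```python
import numpy as np


def iqr_bounds(values, k=1.5):
    """Return (lower, upper) limits k IQRs below the 25th and above the 75th percentile."""
    q25, q75 = np.percentile(values, [25, 75])
    iqr = q75 - q25
    return q25 - k * iqr, q75 + k * iqr


sample = np.array([1, 2, 3, 4, 5, 6, 7, 8, 9, 100])
for k in (1.5, 3.0):
    lower, upper = iqr_bounds(sample, k)
    flagged = sample[(sample < lower) | (sample > upper)]
    print('k=%.1f: lower=%.2f upper=%.2f flagged=%s' % (k, lower, upper, flagged))
```

Here the quartiles are 3.25 and 7.75 (IQR 4.5), so the k = 1.5 fences are -3.5 and 14.5. Only 100 falls outside them, and it also falls outside the k = 3 fences (-10.25 and 21.25), which marks it as an extreme value.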

```python
# identify outliers with interquartile range
from numpy.random import seed
from numpy.random import randn
from numpy import percentile
# seed the random number generator
seed(1)
# generate univariate observations
data = 5 * randn(10000) + 50
# calculate interquartile range
q25, q75 = percentile(data, 25), percentile(data, 75)
iqr = q75 - q25
print('Percentiles: 25th=%.3f, 75th=%.3f, IQR=%.3f' % (q25, q75, iqr))
# calculate the outlier cutoff
cut_off = iqr * 1.5
lower, upper = q25 - cut_off, q75 + cut_off
# identify outliers
outliers = [x for x in data if x < lower or x > upper]
print('Identified outliers: %d' % len(outliers))
# remove outliers
outliers_removed = [x for x in data if x >= lower and x <= upper]
print('Non-outlier observations: %d' % len(outliers_removed))
```


-----Result-----

Percentiles: 25th=46.685, 75th=53.359, IQR=6.674

Identified outliers: 81

Non-outlier observations: 9919


The approach can be used for multivariate data by calculating the limits on each variable in the dataset in turn, and taking outliers as observations that fall outside of the rectangle or hyper-rectangle.
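One way to apply per-variable IQR limits to a two-dimensional array is to compute the quartiles of every column at once with `axis=0` and flag a row if any of its values falls outside its column's limits, i.e. outside the hyper-rectangle. The sketch below uses a small made-up array, and `iqr_outlier_rows` is a hypothetical helper name.

```python
import numpy as np


def iqr_outlier_rows(X, k=1.5):
    """Boolean mask of rows lying outside the per-column IQR limits (the hyper-rectangle)."""
    q25, q75 = np.percentile(X, [25, 75], axis=0)  # one value per column
    iqr = q75 - q25
    lower, upper = q25 - k * iqr, q75 + k * iqr
    # a row is an outlier if ANY of its values falls outside its column's limits
    return ((X < lower) | (X > upper)).any(axis=1)


X = np.array([
    [1.0, 10.0],
    [2.0, 11.0],
    [3.0, 12.0],
    [4.0, 13.0],
    [5.0, 14.0],
    [3.0, 90.0],  # extreme only in the second column
])
mask = iqr_outlier_rows(X)
print('Outlier rows:', np.where(mask)[0])
print('Rows kept:', (~mask).sum())
```

The last row is flagged even though its first value is typical, because the rectangle rule only needs one dimension to exceed its limits; `X[~mask]` then gives the rows to keep.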

5. Automatic Outlier Detection

The local outlier factor, or LOF for short, is a technique that attempts to harness the idea of nearest neighbors for outlier detection.

Each example is assigned a score of how isolated it is, or how likely it is to be an outlier, based on the size of its local neighborhood. Examples with the largest scores are more likely to be outliers.
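A tiny illustration of these scores on made-up points: four points on a unit square plus one distant point, with `n_neighbors=2` chosen only because the sample is so small (the scikit-learn default is 20). scikit-learn exposes the scores through the `negative_outlier_factor_` attribute, which holds the negated LOF, so the sketch flips the sign back; `fit_predict()` returns -1 for outliers and 1 for inliers.

```python
import numpy as np
from sklearn.neighbors import LocalOutlierFactor

# four points on a unit square and one far-away point
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0], [10.0, 10.0]])

lof = LocalOutlierFactor(n_neighbors=2)
labels = lof.fit_predict(X)  # -1 = outlier, 1 = inlier
scores = -lof.negative_outlier_factor_  # flip the sign to get the usual LOF (about 1 for inliers)

for point, label, score in zip(X, labels, scores):
    print(point, label, round(float(score), 2))
```

On this data the four square corners each get an LOF of 1, because their neighborhoods are equally dense, while the distant point scores far higher and is the only row labeled -1.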

The scikit-learn library provides an implementation of this approach in the LocalOutlierFactor class.

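We will use the Boston housing regression problem, which has 13 numerical inputs and one numerical target and requires learning the relationship between suburb characteristics and house prices. The code assumes the dataset is saved locally as housing.csv with no header row. First, we establish a baseline by fitting a linear regression model on the raw training set and reporting its mean absolute error (MAE) on the test set.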

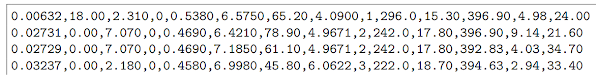

|

| Sample of the first few rows of the housing dataset

|

```python
# evaluate model on the raw dataset
from pandas import read_csv
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_absolute_error
# load the dataset
df = read_csv('housing.csv', header=None)
# retrieve the array
data = df.values
# split into input and output elements
X, y = data[:, :-1], data[:, -1]
# split into train and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.33, random_state=1)
# fit the model
model = LinearRegression()
model.fit(X_train, y_train)
# evaluate the model
yhat = model.predict(X_test)
# evaluate predictions
mae = mean_absolute_error(y_test, yhat)
print('MAE: %.3f' % mae)
```


-----Result-----

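MAE: 3.417

Next, we remove outliers from the training dataset only, leaving the test set untouched so that it remains a fair measure of performance on unseen data. The fit_predict() method of the LocalOutlierFactor class labels each training row as an inlier (1) or an outlier (-1); we keep the rows not labeled -1, refit the same model, and evaluate it on the same test set.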

```python
# evaluate model on training dataset with outliers removed
from pandas import read_csv
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression
from sklearn.neighbors import LocalOutlierFactor
from sklearn.metrics import mean_absolute_error
# load the dataset
df = read_csv('housing.csv', header=None)
# retrieve the array
data = df.values
# split into input and output elements
X, y = data[:, :-1], data[:, -1]
# split into train and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.33, random_state=1)
# summarize the shape of the training dataset
print(X_train.shape, y_train.shape)
# identify outliers in the training dataset
lof = LocalOutlierFactor()
yhat = lof.fit_predict(X_train)
# select all rows that are not outliers
mask = yhat != -1
X_train, y_train = X_train[mask, :], y_train[mask]
# summarize the shape of the updated training dataset
print(X_train.shape, y_train.shape)
# fit the model
model = LinearRegression()
model.fit(X_train, y_train)
# evaluate the model
yhat = model.predict(X_test)
# evaluate predictions
mae = mean_absolute_error(y_test, yhat)
print('MAE: %.3f' % mae)
```


-----Result-----

(339, 13) (339,)

(305, 13) (305,)

MAE: 3.356


First, we can see that the number of examples in the training dataset has been reduced from 339 to 305, meaning 34 rows containing outliers were identified and deleted. We can also see a reduction in MAE from about 3.417 for a model fit on the entire training dataset to about 3.356 for a model fit on the dataset with outliers removed. This is a modest improvement measured on a single train/test split.

The scikit-learn library provides other outlier detection algorithms, such as IsolationForest, that can be used in the same way.
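A sketch of swapping in IsolationForest, which follows the same fit_predict() convention of returning -1 for outliers and 1 for inliers. To keep it self-contained, it uses synthetic data with a few hypothetical injected outliers rather than the housing dataset; with the housing data you would pass X_train instead and keep the same masking step.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

# synthetic training data: 200 Gaussian rows plus five far-away rows (hypothetical outliers)
rng = np.random.default_rng(1)
X_train = rng.normal(size=(200, 2))
X_far = np.array([[20.0, 20.0], [-20.0, 20.0], [20.0, -20.0], [-20.0, -20.0], [25.0, 0.0]])
X_train = np.vstack([X_train, X_far])
y_train = X_train.sum(axis=1)  # placeholder target so the masking step mirrors the tutorial

# identify outliers in the training dataset
iso = IsolationForest(random_state=1)
yhat = iso.fit_predict(X_train)

# select all rows that are not outliers
mask = yhat != -1
X_train_clean, y_train_clean = X_train[mask, :], y_train[mask]
print(X_train.shape, X_train_clean.shape)
```

IsolationForest scores points by how few random splits it takes to isolate them, so it does not rely on a distance-based neighborhood size the way LOF does; which detector works better on a given dataset is an empirical question.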
