A sparse matrix is a matrix that is comprised of mostly zero values. Sparse matrices are distinct from matrices with mostly non-zero values, which are referred to as dense matrices.

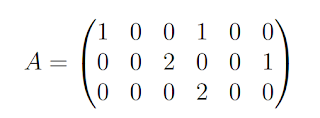

Below is an example of a small 3 × 6 sparse matrix

The example has 13 zero values of the 18 elements in the matrix, giving this matrix a sparsity score of 0.722 or about 72%.

A. Problems with Sparsity

Sparse matrices can cause problems with regards to space and time complexity.

Space Complexity

Very large matrices require a lot of memory, and some very large matrices that we wish to work with are sparse.

In practice, most large matrices are sparse | almost all entries are zeros.

Time Complexity

Assuming a very large sparse matrix can be fit into memory, we will want to perform operations on this matrix. Simply, if the matrix contains mostly zero-values, i.e. no data, then performing operations across this matrix may take a long time where the bulk of the computation performed will involve adding or multiplying zero values together.

B. Sparse Matrices in Machine Learning

Sparse matrices turn up a lot in applied machine learning. In this section, we will look at some common examples to motivate you to be aware of the issues of sparsity.

Data

Sparse matrices come up in some specific types of data, most notably observations that record the occurrence or count of an activity. Three examples include:

- Whether or not a user has watched a movie in a movie catalog.

- Whether or not a user has purchased a product in a product catalog.

- Count of the number of listens of a song in a song catalog.

Data Preparation

Sparse matrices come up in encoding schemes used in the preparation of data. Three common examples include:

- One hot encoding, used to represent categorical data as sparse binary vectors.

- Count encoding, used to represent the frequency of words in a vocabulary for a document

- TF-IDF encoding, used to represent normalized word frequency scores in a vocabulary.

Areas of Study

Some areas of study within machine learning must develop specialized methods to address sparsity directly as the input data is almost always sparse. Three examples include:

- Natural language processing for working with documents of text.

- Recommender systems for working with product usage within a catalog.

- Computer vision when working with images that contain lots of black pixels.

If there are 100,000 words in the language model, then the feature vector has length 100,000, but for a short email message almost all the features will have count zero.

C. Working with Sparse Matrices

The solution to representing and working with sparse matrices is to use an alternate data structure to represent the sparse data. The zero values can be ignored and only the data or non-zero values in the sparse matrix need to be stored or acted upon. There are multiple data structures that can be used to efficiently construct a sparse matrix; three common examples are listed below.

- Dictionary of Keys. A dictionary is used where a row and column index is mapped to a value.

- List of Lists. Each row of the matrix is stored as a list, with each sublist containing the column index and the value.

- Coordinate List. A list of tuples is stored with each tuple containing the row index, column index, and the value.

There are also data structures that are more suitable for performing efficient operations; two commonly used examples are listed below.

- Compressed Sparse Row. The sparse matrix is represented using three one-dimensional arrays for the non-zero values, the extents of the rows, and the column indexes.

- Compressed Sparse Column. The same as the Compressed Sparse Row method except the column indices are compressed and read first before the row indices.

D. Sparse Matrices in Python

SciPy provides tools for creating sparse matrices using multiple data structures, as well as tools for converting a dense matrix to a sparse matrix. Many linear algebra NumPy and SciPy functions that operate on NumPy arrays can transparently operate on SciPy sparse arrays. Further, machine learning libraries that use NumPy data structures can also operate transparently on SciPy sparse arrays, such as scikit-learn for general machine learning and Keras for deep learning.

# sparse matrix

from numpy import array

from scipy.sparse import csr_matrix

# create dense matrix

A = array([

[1, 0, 0, 1, 0, 0],

[0, 0, 2, 0, 0, 1],

[0, 0, 0, 2, 0, 0]])

print(A)

# convert to sparse matrix (CSR method)

S = csr_matrix(A)

print(S)

# reconstruct dense matrix

B = S.todense()

print(B)

from numpy import array

from scipy.sparse import csr_matrix

# create dense matrix

A = array([

[1, 0, 0, 1, 0, 0],

[0, 0, 2, 0, 0, 1],

[0, 0, 0, 2, 0, 0]])

print(A)

# convert to sparse matrix (CSR method)

S = csr_matrix(A)

print(S)

# reconstruct dense matrix

B = S.todense()

print(B)

-----Result-----

[[1 0 0 1 0 0]

[0 0 2 0 0 1]

[0 0 0 2 0 0]]

[0 0 2 0 0 1]

[0 0 0 2 0 0]]

(0, 0) 1

(0, 3) 1

(1, 2) 2

(1, 5) 1

(2, 3) 2

[[1 0 0 1 0 0]

[0 0 2 0 0 1]

[0 0 0 2 0 0]]

# sparsity calculation

from numpy import array

from numpy import count_nonzero

# create dense matrix

A = array([

[1, 0, 0, 1, 0, 0],

[0, 0, 2, 0, 0, 1],

[0, 0, 0, 2, 0, 0]])

print(A)

# calculate sparsity

sparsity = 1.0 - count_nonzero(A) / A.size

print(sparsity)

from numpy import array

from numpy import count_nonzero

# create dense matrix

A = array([

[1, 0, 0, 1, 0, 0],

[0, 0, 2, 0, 0, 1],

[0, 0, 0, 2, 0, 0]])

print(A)

# calculate sparsity

sparsity = 1.0 - count_nonzero(A) / A.size

print(sparsity)

-----Result-----

[[1 0 0 1 0 0]

[0 0 2 0 0 1]

[0 0 0 2 0 0]]

[0 0 2 0 0 1]

[0 0 0 2 0 0]]

0.7222222222222222

No comments:

Post a Comment